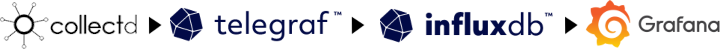

Collectd to InfluxDB v2 with downsampling using Tasks

In a previous blog post I wrote about collecting server statistics with Collectd, passing them to InfluxDB, and graphing them with Grafana. Using “Retention Policies” (RPs) and “Continuous Queries” (CQs), metrics could be downsampled to reduce storage usage. This blog post shows how to do the same with InfluxDB v2.

InfluxDB v2

At the end of 2020, InfluxDB v2 was released. Moving to InfluxDB v2 with the setup I had (Collectd input, downsampling) came with some challenges:...