A couple of manufacturers sell solutions that speed up a big HDD using a relatively small SSD. Examples include:

- Smart Response Technology (SRT) by Intel (hardware solution, writeback)

- Dataplex by Nvelo (Windows, software, writeback)

- ReadyCache by SanDisk (Windows, software, writethrough)

- FancyCache by Romex Software (Windows, software)

But there is also a lot of development on the Linux front: Flashcache, developed by Facebook; dm-cache, by VISA; and bcache, by Kent Overstreet. The last one is interesting because it’s a patch set for the Linux kernel itself and will hopefully be accepted upstream some day.

Hardware setup

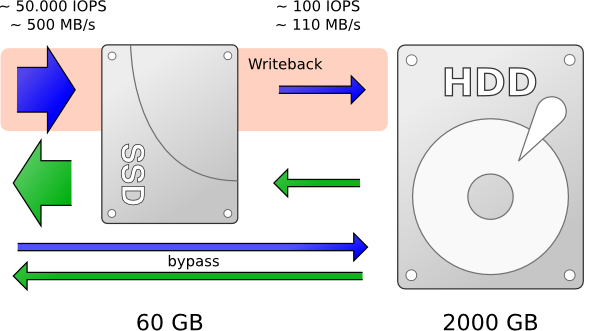

In my low-power home server I use a 2TB Western Digital Green disk (5400 RPM). To give bcache a try I bought a 60GB Intel 330 SSD. Some facts about these drives: the 2TB WD can do about 110 MB/s of sequential reads and writes, and about 100 random operations per second. The 60GB Intel 330 can sequentially read about 500 MB/s and write about 450 MB/s, and it handles about 42,000 random reads and about 52,000 random writes per second. The SSD is much faster!
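Putting these figures side by side shows where the gap really is. Using the approximate numbers quoted above, the SSD is only about four times faster for sequential transfers, but more than two orders of magnitude faster for random operations, which is exactly the access pattern an SSD cache is meant to absorb:

```latex
\begin{aligned}
\text{sequential read:}  &\quad \frac{500\ \text{MB/s}}{110\ \text{MB/s}} \approx 4.5 \\
\text{sequential write:} &\quad \frac{450\ \text{MB/s}}{110\ \text{MB/s}} \approx 4.1 \\
\text{random read:}      &\quad \frac{42\,000\ \text{IOPS}}{100\ \text{IOPS}} = 420 \\
\text{random write:}     &\quad \frac{52\,000\ \text{IOPS}}{100\ \text{IOPS}} = 520
\end{aligned}
```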

The image above shows the idea of SSD caching: frequently accessed data is cached on the SSD to gain better read performance, and writes can also be cached on the SSD using the writeback mechanism.

Prepare Linux kernel and userspace software

To use bcache, you need two things:

- A bcache patched kernel

- bcache-tools for commands like make-bcache and probe-bcache

I used the latest available 3.2 kernel, into which the bcache-3.2 branch from Kent’s git repository merged cleanly. Don’t forget to enable the BCACHE module before compiling.
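A quick way to double-check that last step is to look for the option in the kernel’s .config. The sketch below assumes the option is named CONFIG_BCACHE, as it is in later mainline kernels; the bcache-3.2 branch may name it differently, and bcache_in_config is a hypothetical helper, not part of any tool.

```sh
#!/bin/sh
# Hypothetical helper: check whether a kernel .config enables bcache.
# Assumption: the option is called CONFIG_BCACHE (the name used by later
# mainline kernels); the bcache-3.2 branch may differ.
bcache_in_config() {
  config="${1:-.config}"   # path to the kernel .config, default ./.config
  if grep -Eq '^CONFIG_BCACHE=(y|m)$' "$config"; then
    # Print the matching line so you can see whether it is built in or a module
    echo "bcache enabled: $(grep -E '^CONFIG_BCACHE=' "$config")"
  else
    echo "bcache NOT enabled in $config" >&2
    return 1
  fi
}
```

Run it from the top of the kernel source tree after configuring and before building, for example `bcache_in_config .config`.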

On my low-power home server I run Debian. Since no bcache-tools Debian package is available yet, I created my own. Fortunately, damoxc had already packaged bcache-tools for Ubuntu.

Debian package: pommi.nethuis.nl/…/bcache/

Git web: http://git.nethuis.nl/?p=bcache-tools.git;a=summary

Git: http://git.nethuis.nl/pub/bcache-tools.git

Bcache setup

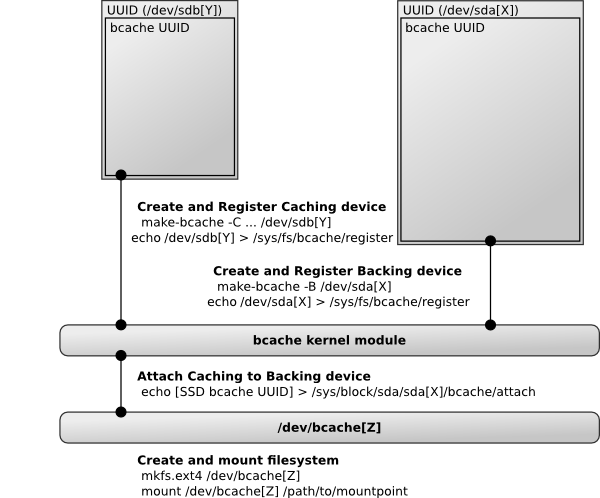

Unfortunately bcache isn’t plug-and-play: you can’t use it with an existing formatted partition. First you have to turn two existing devices into a caching device (the SSD) and a backing device (the HDD). These can then be attached to each other to create a /dev/bcache0 device, which you can format with your favourite filesystem. The separate caching and backing devices are needed because bcache is a software implementation and has to know what is going on; for example, when booting it needs to know which devices to attach to each other. The commands for this procedure are shown in the image above.
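Since the commands are only shown as an image, here is a rough sketch of the same procedure as a shell function. It assumes the SSD partition is /dev/sdb1 and the HDD partition is /dev/sda1 (placeholders), and that make-bcache takes -C for a caching device and -B for a backing device, as documented for later bcache-tools releases; the version used here may differ. MAKE_BCACHE and SYSFS_ROOT are hypothetical overrides that let you dry-run the steps against a fake directory tree. Be careful: make-bcache overwrites the start of both partitions.

```sh
#!/bin/sh
# Sketch of the bcache setup steps (not the author's exact commands).
# Assumptions: make-bcache -C creates a caching device and -B a backing
# device, as in later bcache-tools; MAKE_BCACHE and SYSFS_ROOT are
# hypothetical overrides for dry runs (defaults: make-bcache and /sys).
MAKE_BCACHE="${MAKE_BCACHE:-make-bcache}"
SYSFS_ROOT="${SYSFS_ROOT:-/sys}"

bcache_create() {
  ssd="$1"  # future caching device, e.g. /dev/sdb1 (placeholder)
  hdd="$2"  # future backing device, e.g. /dev/sda1 (placeholder)
  # WARNING: both commands overwrite the start of the given partitions
  "$MAKE_BCACHE" -C "$ssd" || return 1
  "$MAKE_BCACHE" -B "$hdd" || return 1
  # Register both devices with the kernel (udev/probe-bcache may also do this)
  echo "$ssd" > "$SYSFS_ROOT/fs/bcache/register" || return 1
  echo "$hdd" > "$SYSFS_ROOT/fs/bcache/register"
}
```

For example `bcache_create /dev/sdb1 /dev/sda1`. After that the cache set still has to be attached to the backing device (see the attach command later in this post), and /dev/bcache0 can be formatted, for example with `mkfs.ext4 /dev/bcache0`.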

After this I had a working SSD caching setup: frequently used data is stored on the SSD, and accessing and reading frequently used files is much faster now. By default bcache uses writethrough caching, which means that only reads are sped up: a write is not complete until it has been written to the backing device (HDD).

To speed up writes as well, you have to enable writeback caching. Keep in mind, though, that a writeback cache carries a risk of data loss, for example after a power failure or when the SSD dies. Bcache uses a fairly simple journalling mechanism on the caching device and will try to recover the data after a power failure, but there is a chance you will end up with corruption.

```sh
echo writeback > /sys/block/sda/sda[X]/bcache/cache_mode
```
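Reading cache_mode tells you which mode is active. The sketch below wraps reading and writing it. It assumes that reading the file lists the available modes with the active one in brackets (for example `[writethrough] writeback`), which is how later kernels present it; the helper names and the SYSFS_ROOT override (default /sys) are hypothetical. Only the two modes discussed here are accepted, although later kernels also offer writearound and none.

```sh
#!/bin/sh
# Hypothetical helpers for bcache's cache_mode attribute.
# Assumption: reading cache_mode shows the active mode in brackets,
# e.g. "[writethrough] writeback", as later kernels do.
SYSFS_ROOT="${SYSFS_ROOT:-/sys}"   # override only for testing

_bcache_dir() {
  # Map a backing partition to its bcache sysfs dir: sda1 -> .../block/sda/sda1/bcache
  echo "$SYSFS_ROOT/block/${1%%[0-9]*}/$1/bcache"
}

bcache_get_mode() {
  # Print the bracketed (active) mode, e.g. "writethrough"
  sed -n 's/.*\[\([a-z]*\)\].*/\1/p' "$(_bcache_dir "$1")/cache_mode"
}

bcache_set_mode() {
  # Only accept the two modes discussed in this post
  case "$2" in
    writethrough|writeback) ;;
    *) echo "unsupported mode: $2" >&2; return 1 ;;
  esac
  echo "$2" > "$(_bcache_dir "$1")/cache_mode"
}
```

For example, `bcache_set_mode sda1 writeback` followed by `bcache_get_mode sda1` on a real system.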

When writes are cached on the caching device, the cache is called dirty. It is clean again once all cached writes have been written to the backing device. You can check the state of the writeback cache with:

```sh
cat /sys/block/sda/sda[X]/bcache/state
```
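Because a detach has to flush dirty data first, it can be handy to wait until the cache reports clean before detaching or shutting down. A minimal polling sketch, assuming the state file reads `clean` once all dirty data has been written back (later kernels report `no cache`, `clean`, `dirty` or `inconsistent`); bcache_wait_clean and SYSFS_ROOT are hypothetical names:

```sh
#!/bin/sh
# Hypothetical helper: poll the bcache state until it reads "clean".
# Assumption: the state attribute says "clean" once no dirty data is left.
SYSFS_ROOT="${SYSFS_ROOT:-/sys}"   # override only for testing

bcache_wait_clean() {
  part="$1"             # backing partition, e.g. sda1
  timeout="${2:-600}"   # give up after this many seconds
  state_file="$SYSFS_ROOT/block/${part%%[0-9]*}/$part/bcache/state"
  waited=0
  while :; do
    state=$(cat "$state_file") || return 1   # fail if the attribute is missing
    [ "$state" = "clean" ] && return 0
    if [ "$waited" -ge "$timeout" ]; then
      echo "$part still '$state' after ${timeout}s" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
}
```

For example, `bcache_wait_clean sda1 300 && echo 1 > /sys/block/sda/sda1/bcache/detach` waits up to five minutes and only detaches once the cache is clean.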

To detach the caching device from the backing device, run the command below (/dev/bcache0 will still be available). This can take a while if the cache contains dirty data, because that data must be written to the backing device first.

```sh
echo 1 > /sys/block/sda/sda[X]/bcache/detach
```

Attach the caching device again (or attach another caching device):

```sh
echo [SSD bcache UUID] > /sys/block/sda/sda[X]/bcache/attach
```
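The attach command needs the UUID of the cache set. Judging by the unregister path later in this post, each registered cache set shows up as a directory named after its UUID in /sys/fs/bcache, so it can be looked up instead of copied by hand. A sketch under that assumption; the helper names and the SYSFS_ROOT override are hypothetical:

```sh
#!/bin/sh
# Hypothetical helpers: look up cache set UUIDs and attach the only one.
# Assumption: each cache set is a directory named after its UUID in
# /sys/fs/bcache, next to plain files such as "register".
SYSFS_ROOT="${SYSFS_ROOT:-/sys}"   # override only for testing

bcache_cache_sets() {
  # Print one UUID per line; the trailing slash makes the glob match directories only
  for d in "$SYSFS_ROOT"/fs/bcache/*/; do
    if [ -d "$d" ]; then
      basename "$d"
    fi
  done
}

bcache_attach_only_set() {
  part="$1"   # backing partition, e.g. sda1
  sets=$(bcache_cache_sets)
  count=$(printf '%s\n' "$sets" | grep -c .)
  # Refuse to guess when there is no cache set or more than one
  if [ "$count" -ne 1 ]; then
    echo "expected exactly one cache set, found $count" >&2
    return 1
  fi
  echo "$sets" > "$SYSFS_ROOT/block/${part%%[0-9]*}/$part/bcache/attach"
}
```

With a single SSD registered, `bcache_attach_only_set sda1` does the same as the attach command above without typing the UUID.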

Unregister the caching device. This can be done with or without detaching it first; /dev/bcache0 remains available because the backing device still provides it:

```sh
echo 1 > /sys/fs/bcache/[SSD bcache UUID]/unregister
```

Register the caching device again (or register another caching device):

```sh
echo /dev/sdb[Y] > /sys/fs/bcache/register
```

Attach the caching device:

```sh
echo [SSD bcache UUID] > /sys/block/sda/sda[X]/bcache/attach
```

Stop the backing device. Once /dev/bcache0 has been unmounted, this stops and removes it. Don’t forget to unregister the caching device as well:

```sh
echo 1 > /sys/block/sda/sda[X]/bcache/stop
```
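The order of these last steps matters: unmount /dev/bcache0, stop the backing device, then unregister the caching device. A sketch that refuses to continue while /dev/bcache0 is still mounted; the device name, the helper name and the SYSFS_ROOT/MOUNTS overrides are assumptions for illustration:

```sh
#!/bin/sh
# Hypothetical helper: tear down a bcache setup in a safe order.
# Assumes the bcache device is /dev/bcache0, as in this post.
SYSFS_ROOT="${SYSFS_ROOT:-/sys}"      # override only for testing
MOUNTS="${MOUNTS:-/proc/mounts}"      # override only for testing

bcache_teardown() {
  part="$1"   # backing partition, e.g. sda1
  uuid="$2"   # cache set UUID
  # Step 1 is manual: /dev/bcache0 must already be unmounted
  if grep -q '^/dev/bcache0 ' "$MOUNTS"; then
    echo "/dev/bcache0 is still mounted; unmount it first" >&2
    return 1
  fi
  # Step 2: stop the backing device, which removes /dev/bcache0
  echo 1 > "$SYSFS_ROOT/block/${part%%[0-9]*}/$part/bcache/stop" || return 1
  # Step 3: unregister the caching device
  echo 1 > "$SYSFS_ROOT/fs/bcache/$uuid/unregister"
}
```

For example, `umount /dev/bcache0 && bcache_teardown sda1 UUID`, with UUID replaced by the cache set UUID.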

Benchmark

To benchmark this setup I used two different tools: Bonnie++ and fio (Flexible IO tester).

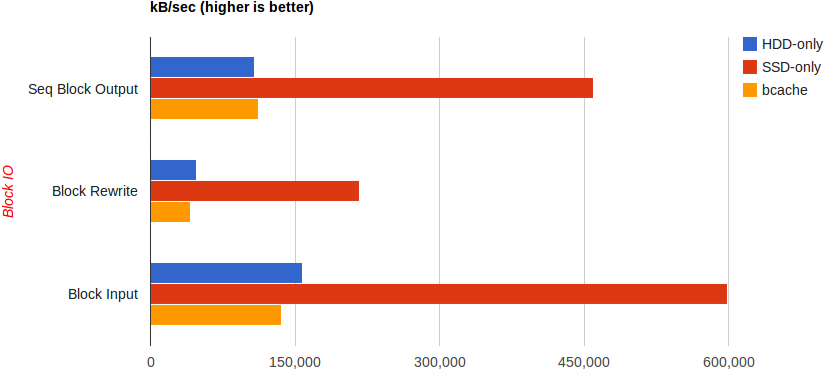

Bonnie++

Unfortunately Bonnie++ isn’t well suited to testing SSD caching setups.

This graph shows that I’m hitting the sequential input and output limits of the drives in the HDD-only and SSD-only tests. In this case the bcache test doesn’t differ much from the HDD-only test: Bonnie++ isn’t able to warm up the cache, and all sequential writes bypass the write cache.
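If you want sequential IO to go through the cache during a benchmark like this, later bcache versions expose a sequential_cutoff threshold on the backing device: sequential IO beyond the cutoff bypasses the cache, and writing 0 disables the bypass. Whether the 3.2-era patch has this attribute is an assumption, so the sketch checks for it first. bcache_set_seq_cutoff and the SYSFS_ROOT override are hypothetical names.

```sh
#!/bin/sh
# Hypothetical helper: change bcache's sequential bypass threshold.
# Assumption: the backing device exposes sequential_cutoff (later kernels do);
# 0 disables the bypass. The previous value is printed so it can be restored.
SYSFS_ROOT="${SYSFS_ROOT:-/sys}"   # override only for testing

bcache_set_seq_cutoff() {
  part="$1"    # backing partition, e.g. sda1
  value="$2"   # new threshold, e.g. 0
  f="$SYSFS_ROOT/block/${part%%[0-9]*}/$part/bcache/sequential_cutoff"
  if [ ! -e "$f" ]; then
    echo "no sequential_cutoff attribute at $f" >&2
    return 1
  fi
  echo "Previous value: $(cat "$f")"
  echo "$value" > "$f"
}
```

For example, `bcache_set_seq_cutoff sda1 0` before a benchmark run, and write the printed previous value back afterwards.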

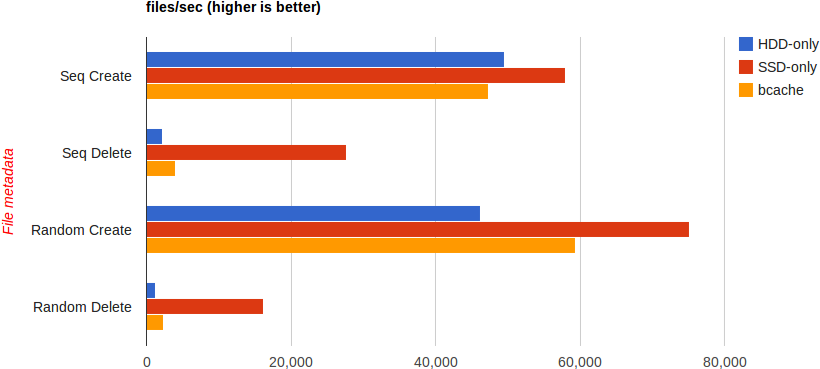

In the File metadata tests the performance improves when using bcache.

fio

The Flexible IO tester is much better suited to benchmarking these situations. For these tests I used the ssd-test example job file and changed the size parameter to 8G.
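fio ships this job file as examples/ssd-test.fio. The sketch below writes an equivalent job with size=8G; apart from the size, the values are reproduced from that example and may differ between fio versions. The 4k block size and 60 second runtime are consistent with the results below (bandwidth divided by IOPS is about 4 KB, and each job ran for about 60 seconds). write_ssd_test_job is a hypothetical helper, and its argument is a placeholder for the mount point under test.

```sh
#!/bin/sh
# Hypothetical helper: print a fio job modelled on fio's examples/ssd-test.fio
# with size changed to 8G. Other values are assumed from that example.
write_ssd_test_job() {
  dir="$1"   # directory on the filesystem under test, e.g. /mnt/bcache
  cat <<EOF
[global]
bs=4k
ioengine=libaio
iodepth=4
size=8G
direct=1
runtime=60
directory=$dir
filename=ssd.test.file

[seq-read]
rw=read
stonewall

[rand-read]
rw=randread
stonewall

[seq-write]
rw=write
stonewall

[rand-write]
rw=randwrite
stonewall
EOF
}
```

For example, `write_ssd_test_job /mnt/bcache > ssd-test.fio && fio ssd-test.fio`. The stonewall lines make each job wait for the previous one, so the four tests run one after another rather than in parallel.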

| Setup | Job | Data transferred (io) | Bandwidth (bw) | IOPS | Runtime (runt) |
|---|---|---|---|---|---|
| HDD-only | seq-read | 4084MB | 69695KB/s | 17423 | 60001msec |
| HDD-only | rand-read | 30308KB | 517032B/s | **126** | 60026msec |
| HDD-only | seq-write | 2792MB | 47642KB/s | 11910 | 60001msec |
| HDD-only | rand-write | 37436KB | 633522B/s | **154** | 60510msec |
| SSD-only | seq-read | 6509MB | 110995KB/s | 27748 | 60049msec |
| SSD-only | rand-read | 1896MB | 32356KB/s | 8088 | 60001msec |
| SSD-only | seq-write | 2111MB | 36031KB/s | 9007 | 60001msec |
| SSD-only | rand-write | 1212MB | 20681KB/s | 5170 | 60001msec |
| bcache | seq-read | 4127.9MB | 70447KB/s | 17611 | 60001msec |
| bcache | rand-read | 262396KB | 4367.8KB/s | **1091** | 60076msec |
| bcache | seq-write | 2516.2MB | 42956KB/s | 10738 | 60001msec |
| bcache | rand-write | 2273.4MB | 38798KB/s | **9699** | 60001msec |

In these tests the SSD is much faster at random operations. With bcache, random operations are also a lot faster than in the HDD-only tests: roughly 8.7 times the random-read IOPS (1091 vs. 126) and 63 times the random-write IOPS (9699 vs. 154). Interestingly, I’m not able to hit the sequential IO limits of the HDD and SSD in these tests. I think this is because my CPU (Intel G620) isn’t powerful enough; on another machine with an Intel i5 processor, fio does hit the IO limits of the SSD.
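The speedups can be read straight from fio’s summary lines. A small sketch that pulls the iops field out of two lines and prints the ratio; fio_iops and iops_speedup are hypothetical helpers that assume the `iops=N` format fio printed for these runs:

```sh
#!/bin/sh
# Hypothetical helpers: compare two fio summary lines by their iops field.
fio_iops() {
  # Extract N from "... iops=N, ..." (surrounding markup is ignored)
  printf '%s\n' "$1" | sed -n 's/.*iops=\([0-9][0-9]*\).*/\1/p'
}

iops_speedup() {
  # $1: baseline line, $2: comparison line; prints the ratio with one decimal
  base=$(fio_iops "$1")
  new=$(fio_iops "$2")
  awk -v b="$base" -v n="$new" 'BEGIN { printf "%.1f\n", n / b }'
}
```

Comparing the HDD-only and bcache rand-read lines this way gives 8.7, and the rand-write lines give 63.0.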